CAVAPA

Measure the Motion of Groups from Video

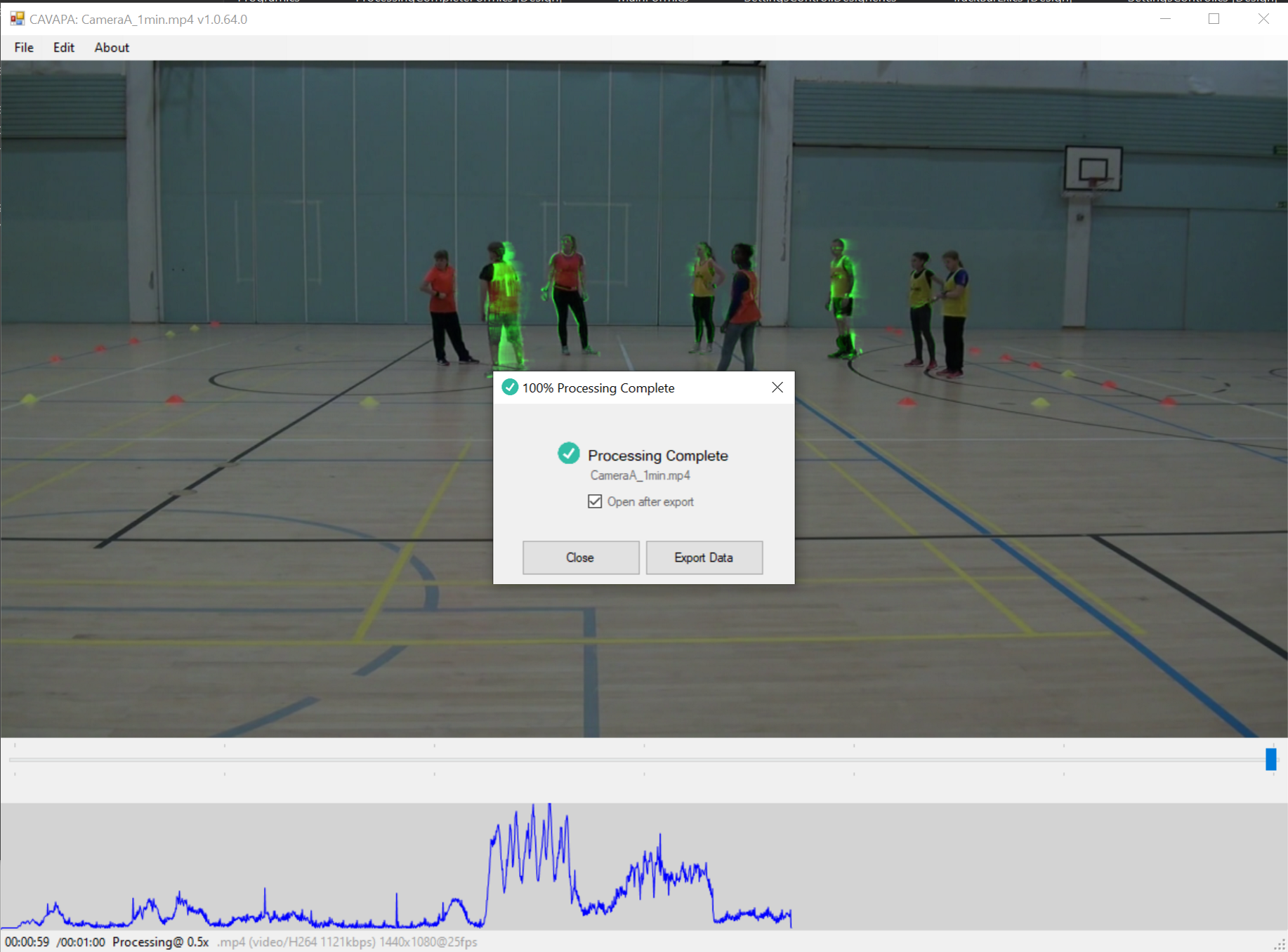

CAVAPA is a new method for processing video to compute a novel metric that describes the amount of physical exertion of the moving subjects within the video frame.

Note

CAVAPA's motion metric has been validated against heart-rate (HR) and accelerometer data and against manual observational scoring. Full details will be added once the paper is published. In the meantime, see the Quantitative Validation section.

This is part of a research project with Greg Ruthenbeck PhD, Heidi Pasi, Prof. Taru Lintunen, Prof. Martin Hagger (and others) at Jyväskylä University, Finland.

This documentation and the CAVAPA source code are written and maintained by Greg Ruthenbeck and [contributors tba].

Test data is available via the CAVAPA Project page at the Open Science Framework (OSF). This includes video samples (input and output), accelerometer data, and heart-rate monitor data for all participants of the validation study.

Abstract

CAVAPA simplifies the measurement of physical activity of groups. Sports science has long used video analysis for biomechanical insights. Other methods rely on specialist hardware such as accelerometers, or on special cameras and motion-tracking markers. CAVAPA works on any video, with no strict requirements on camera angle, lighting conditions, or video format.

Physical activity correlates with general wellbeing [1]. Hence, measuring the physical activity of groups is an important tool for quantifying the effectiveness of interventions aimed at improving general wellbeing. Existing methods for measuring the physical activity of groups are varied and have limitations such as requiring specialized devices, requiring manual processing, or being complex to apply to common scenarios.

We are in the process of publishing details of a study in which CAVAPA results are compared with accelerometer data and observational data (manual observational measures, using the VOST tool). Once the publication is available we will add a link to it here.

Quantitative Validation

CAVAPA has been compared with other methods of estimating or measuring physical activity levels for one indoor and one outdoor video. The data analyses, conducted in a Python Jupyter Notebook, compare CAVAPA output with manually observed scores, accelerometer data, and heart-rate monitor data.

- CAVAPA Validation Data Analysis CSV data & code on GitHub

- CAVAPA Validation Data Analysis live Jupyter Notebook (on binder.org)

In summary, the indoor test gave a correlation of ~0.75 between CAVAPA output and manually observed physical-activity scores, and the outdoor test gave ~0.65. Informal visual inspection of the time series suggests that CAVAPA tracks physical activity and is arguably preferable to manual observation: it is much easier to apply and could be more accurate than other measures.
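To make the correlation figures concrete, here is a minimal sketch of how such a coefficient can be computed. It assumes a Pearson coefficient over two equal-length, time-aligned series; the validation notebook may use a different statistic or alignment step. The function name and the sample values are made up for illustration and are not taken from the study data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Illustrative values only (NOT study data): one CAVAPA value and one
# observer score per time window.
cavapa_metric = [0.10, 0.40, 0.35, 0.80, 0.60]
observer_score = [1, 2, 2, 4, 3]
print(round(pearson_r(cavapa_metric, observer_score), 2))
```

In practice the two series would first need to be resampled to a common time base, since video frames, accelerometer samples, and observer scores are recorded at different rates.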

Installation

Video Pre-Processing

It may be necessary to pre-process video to ensure the best possible output from CAVAPA.

Interlaced Video

Many video cameras record interlaced video. Interlacing artifacts reduce the accuracy of CAVAPA if they are not removed. Fortunately, FFmpeg can de-interlace the frames while decoding, using a few additional command-line parameters for YADIF (Yet Another De-Interlacing Filter).

ffmpeg -i input.mp4 -vf yadif=parity=auto output.mp4

| | |
|---|---|
| Interlacing causes striping | Properly decoded de-interlaced frame |

Consistent Frame-rate

To simplify calculations, all of our videos were processed at 25fps. Videos can be de-interlaced and converted to 25fps with a single FFmpeg command. The command below also re-encodes the video with the HEVC codec using NVIDIA's GPU-accelerated encoder (hevc_nvenc, which requires a supported NVIDIA GPU) for smaller files and minimal picture degradation.

ffmpeg -i in.mp4 -c:v hevc_nvenc -vf yadif=parity=auto -r:v 25 -c:a copy out.mp4

If only 25fps conversion is needed, use this command:

ffmpeg -i in.mp4 -r:v 25 -c:a copy out.mp4
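When many videos need the same treatment, the two commands above can be generated from a script. The following is a minimal sketch, not part of CAVAPA: the function name and its parameters are hypothetical, and it only builds the argument list, leaving execution to the caller.

```python
def build_preprocess_command(src, dst, fps=25, deinterlace=True, use_nvenc=False):
    """Return an FFmpeg argument list mirroring the commands shown above.

    Hypothetical helper: the name and parameters are assumptions made
    for this example only.
    """
    cmd = ["ffmpeg", "-i", src]
    if use_nvenc:
        # GPU-accelerated HEVC encoding; needs a supported NVIDIA GPU.
        cmd += ["-c:v", "hevc_nvenc"]
    if deinterlace:
        # YADIF de-interlacing with automatic field-parity detection.
        cmd += ["-vf", "yadif=parity=auto"]
    # Force a constant output frame rate and copy the audio untouched.
    cmd += ["-r:v", str(fps), "-c:a", "copy", dst]
    return cmd


# De-interlace, convert to 25fps, and re-encode with NVENC:
print(build_preprocess_command("in.mp4", "out.mp4", use_nvenc=True))
# Frame-rate conversion only:
print(build_preprocess_command("in.mp4", "out.mp4", deinterlace=False))
```

The returned list can be passed to `subprocess.run(cmd, check=True)`, which avoids shell quoting issues because each argument is handed to FFmpeg as-is.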

Convert video into images

CAVAPA currently requires a folder containing only images as input. To convert a video into the required format, use the following command in FFmpeg.

ffmpeg -i my_video.mp4 -q:v 1 -qmin 1 -qmax 1 ./frames/my_video_%06d.jpg

Info

Ideally this step wouldn't be needed. We are working on a version of CAVAPA that processes the video directly. This step can be quite slow and requires enough storage space for the decoded frame images.
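Because the extracted frames can take a lot of disk space, it may help to estimate the requirement before running the conversion. The sketch below builds the extraction command and gives a rough storage estimate. Both function names are hypothetical, and the default mean JPEG size of 300 KB per frame is an assumption for illustration; measure a few frames of your own footage to get a realistic value.

```python
from pathlib import Path


def build_extract_command(video, frames_dir):
    """Return an FFmpeg argument list that writes one JPEG per frame.

    Hypothetical helper. FFmpeg does not create the output folder,
    so frames_dir must already exist when the command is run.
    """
    video = Path(video)
    # Same naming scheme as the command above: <video name>_000001.jpg, ...
    pattern = (Path(frames_dir) / f"{video.stem}_%06d.jpg").as_posix()
    return ["ffmpeg", "-i", video.as_posix(),
            "-q:v", "1", "-qmin", "1", "-qmax", "1", pattern]


def estimate_frame_storage(duration_s, fps=25, mean_frame_kb=300):
    """Return (frame_count, size_in_MB) for a clip of duration_s seconds.

    mean_frame_kb is an assumed average JPEG size, not a measured value.
    """
    frames = int(duration_s * fps)
    return frames, frames * mean_frame_kb / 1024


print(build_extract_command("my_video.mp4", "frames"))
frames, size_mb = estimate_frame_storage(duration_s=10 * 60)
print(f"{frames} frames, roughly {size_mb:.0f} MB")
```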

Post-Processing

Since CAVAPA processes videos as a sequence of images (i.e. frames), its output is also a set of frames (plus a .csv data file). The output frame images can be converted to a video using the following commands.

Encoding CAVAPA output frames into a video

ffmpeg -i ./cavapa_frames/my_video_%06d.jpg my_video.mp4 -loglevel error

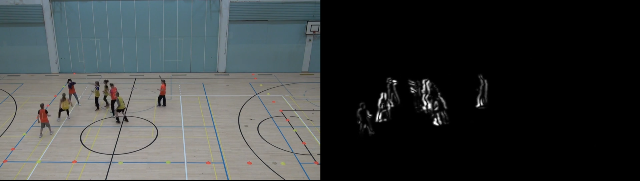

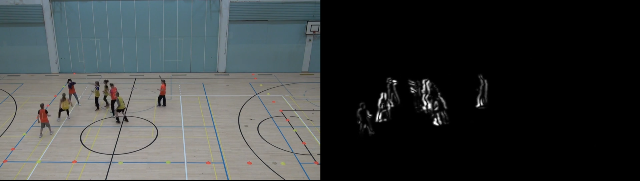
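Before encoding, it can be worth checking that the frame numbering has no gaps. FFmpeg reads a numbered image sequence in order, so a missing frame number would typically cut the resulting video short. The sketch below assumes the `my_video_%06d.jpg` naming used above; the function name is hypothetical.

```python
import re

# Matches the six-digit counter produced by the %06d pattern, e.g. _000123.jpg
FRAME_RE = re.compile(r"_(\d{6})\.jpg$")


def missing_frame_numbers(filenames):
    """Return frame numbers absent between the first and last frame found."""
    matches = (FRAME_RE.search(name) for name in filenames)
    present = {int(m.group(1)) for m in matches if m}
    if not present:
        return []
    return [n for n in range(min(present), max(present) + 1)
            if n not in present]


names = ["my_video_000001.jpg", "my_video_000002.jpg", "my_video_000004.jpg"]
print(missing_frame_numbers(names))
```

For a real folder, the file names could be collected with `os.listdir("cavapa_frames")` and passed to the same function.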

Combining Videos Side-by-Side

Test videos can be generated that show the input video alongside the CAVAPA-processed video.

ffmpeg -i videos/original.mpg -i cavapa_output.mp4 -filter_complex '[0:v]pad=iw*2:ih[int];[int][1:v]overlay=W/2:0[vid]' -map '[vid]' output-sxs.mp4
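The filter graph in this command has two steps: `pad=iw*2:ih` doubles the width of the first video's canvas, and `overlay=W/2:0` places the second video in the right-hand half. The sketch below builds the same command as an argument list, which sidesteps shell quoting of the square brackets. The helper names are hypothetical, and the layout assumes both videos have the same resolution.

```python
import shlex


def build_side_by_side_command(left, right, dst):
    """Return an FFmpeg argument list placing `right` beside `left`."""
    filter_graph = (
        "[0:v]pad=iw*2:ih[int];"           # widen the canvas of input 0 to 2x
        "[int][1:v]overlay=W/2:0[vid]"     # draw input 1 on the right half
    )
    return ["ffmpeg", "-i", left, "-i", right,
            "-filter_complex", filter_graph,
            "-map", "[vid]", dst]


def to_shell(cmd):
    """Render an argument list as a safely quoted shell command line."""
    return " ".join(shlex.quote(arg) for arg in cmd)


cmd = build_side_by_side_command(
    "videos/original.mpg", "cavapa_output.mp4", "output-sxs.mp4")
print(to_shell(cmd))
```

Passing `cmd` directly to `subprocess.run(cmd, check=True)` needs no quoting at all; `to_shell` is only for displaying or logging the command.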

Documentation

This documentation is built using MkDocs Material Boilerplate.

Quick start

git clone https://github.com/gregruthenbeck/cavapa_docs.git

cd cavapa_docs

pipenv sync --dev

pipenv shell

inv serve --config-file cavapa_docs.yml -d localhost:8008

Dependencies

- Python 3.6 or higher

- pip

- pipenv

- mkdocs/mkdocs: Project documentation with Markdown

- squidfunk/mkdocs-material: A Material Design theme for MkDocs

License

- MIT License

- The graduate cap icon made by Freepik from www.flaticon.com is licensed under CC BY 3.0

About Author


[1] Penedo, F.J., Dahn, J.R., 2005. Exercise and well-being: a review of mental and physical health benefits associated with physical activity. Curr. Opin. Psychiatry 18, 189–193.